We still underestimate Nvidia.

Even with a market cap exceeding $4 trillion, we still underestimate Nvidia, and massively so.

In many people's minds, Nvidia remains that “nuclear bomb factory.” In reality, beyond the chips urgently needed for AI, Nvidia has always harbored ambitions for a cloud platform.

In 2023, Nvidia's DGX Cloud made a high-profile debut. Each instance featured eight H100 chips and was priced at $36,999 per month, a top-of-market rate that signaled Nvidia's challenge to established cloud giants like AWS and Azure.

Yet by mid-2025, this “favorite child” had quietly stepped down from center stage. Nvidia redirected its multi-billion-dollar cloud spending commitments away from DGX Cloud, repositioning the service as internal infrastructure and an R&D resource rather than a flagship product for the enterprise market.

Taking its place is Lepton, a GPU leasing and scheduling marketplace launched in 2025. Lepton is defined as a platform for managing and distributing computing power, which makes it more akin to an “entry point” for computing demand.

Why did DGX Cloud exit? Can Lepton carry forward NVIDIA's ambition to dominate cloud computing in the AI era?

- Retreating to Advance

Many may recall the 2023 “GPU shortage,” when companies often couldn't purchase H100s even with the funds in hand. Nvidia capitalized on this by launching DGX Cloud, offering its high-end computing clusters on a monthly rental basis for immediate enterprise access.

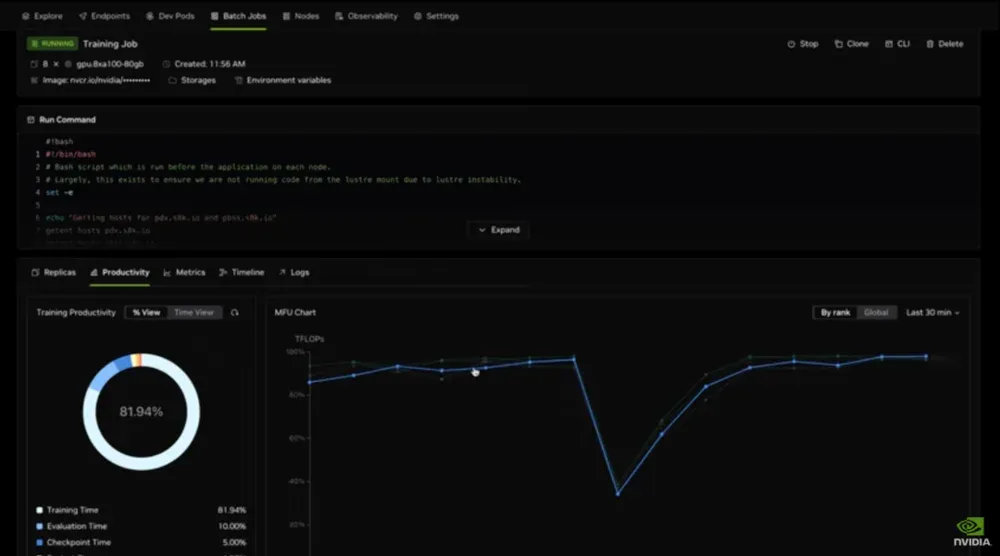

In its first year, DGX Cloud indeed gained significant traction. By the end of 2024, Nvidia's financial reports showed its software and services revenue (including DGX Cloud) had reached an annualized level of $2 billion.

However, the turning point came in the second half of 2024. As GPU supply gradually eased, cloud providers like Amazon, Microsoft, and Google began aggressively cutting prices, eroding DGX Cloud's competitive edge. Take Amazon's AWS as an example: its price cuts for H100 and A100 instances reached up to 45%, significantly undercutting DGX Cloud's rental rates.

For customers, the scarcity premium of DGX Cloud quickly lost its footing.

A more pressing concern lies in “channel conflict.”

Amazon, Microsoft, and Google are Nvidia's largest chip buyers, providing significant revenue support. The direct customer model of DGX Cloud means Nvidia is competing with these partners for business. Every DGX Cloud contract signed risks cannibalizing revenue from AWS, Azure, or GCP.

The concerns of these buyers are predictable. The longer-term risk is that they respond by ramping up their own chip development, such as AWS's Trainium and Google's TPUs, to reduce their structural dependence on Nvidia.

Moreover, building stable customer loyalty remains challenging in the short term. Some enterprises may treat DGX Cloud as a temporary solution, renting it for a few months during periods of tight capacity or urgent project launches, before migrating back to their long-term partners like AWS, Azure, or Google Cloud.

Against this backdrop, Nvidia's financial disclosures for its latest fiscal year no longer attribute its massive cloud spending commitments to DGX Cloud. While the service remains listed under revenue categories, its role has clearly shifted toward internal infrastructure. In other words, DGX Cloud persists but is gradually retreating into Nvidia's internal operations, abandoning direct competition with Microsoft, Amazon, Google, and others.

Beyond transitioning DGX Cloud for internal use, NVIDIA has redirected external attention toward a new platform-based entry point:

Lepton.

Launched in May 2025 under the DGX Cloud umbrella, Lepton differs fundamentally from DGX Cloud's model of directly leasing Nvidia's own AI chips to customers. Lepton does not touch GPU inventory at all; its sole function is to “direct demand to the appropriate cloud service provider,” including AWS, Azure, and cloud providers Nvidia has cultivated itself.

“Lepton connects our global network of GPU cloud providers with AI developers,” stated Jensen Huang, Nvidia's founder and CEO, during the Lepton launch. The goal is:

“To build a global-scale AI factory.”

In short, to avoid competing with its channel partners, Nvidia's strategic focus has shifted away from an “Nvidia Cloud.”

- NVIDIA's “Circle of Friends”

However, the phasing out of DGX Cloud does not mean NVIDIA has abandoned the cloud. Over the past two years, NVIDIA has been actively nurturing its own cloud service provider “partners.” There have even been seemingly counterintuitive scenarios—NVIDIA selling GPUs to cloud partners while simultaneously leasing computing power back from them.

Take CoreWeave as an example. Not only did Nvidia invest $100 million in CoreWeave in 2023, but it also prioritized supplying H100 GPUs to the company. During periods of supply shortages, this made CoreWeave one of the few cloud providers capable of offering Nvidia GPUs at scale. Nvidia then turned around and leased these very GPUs back from CoreWeave.

Lambda, a much smaller GPU cloud provider, follows a similar model. In September 2025, Nvidia signed a $1.5 billion, four-year lease agreement with Lambda, including the rental of 10,000 servers equipped with its top-tier GPUs, valued at approximately $1.3 billion. This deal made Nvidia Lambda's largest customer, with Nvidia's own R&D teams using these GPUs for model training.

The mechanics work as follows. First, Nvidia generates immediate revenue by selling chips, improving its financial reports and satisfying shareholders. Second, partners secure stable cash flow by leasing out GPUs, enabling them to scale operations. Third, Nvidia locks in computing power under its control through leasing agreements, ensuring uninterrupted supply during critical periods. This approach mirrors how ordinary companies opt for cloud services over building their own data centers, offering greater flexibility to manage R&D peaks and troughs.

The ingenuity lies in the timing: revenue from chip sales is immediately booked, while expenses are deferred. Rental payments are gradually amortized over future years as operating costs. Simultaneously, NVIDIA avoids building data centers, minimizing asset burdens.
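The timing argument can be illustrated with a rough year-by-year sketch. It is a simplification under stated assumptions, not Nvidia's actual accounting: the lease terms reuse the Lambda figures quoted above ($1.5 billion over four years), while the chip sale amount, the straight-line expense schedule, and the names `LeasebackDeal` and `yearly_view` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeasebackDeal:
    chip_sale_usd: float    # hypothetical chip revenue, recognized at sale
    lease_total_usd: float  # total lease commitment over the term
    lease_years: int        # lease term in years


def yearly_view(deal: LeasebackDeal) -> list[tuple[int, float, float]]:
    """Return (year, revenue_booked, lease_expense) rows, starting at year 1."""
    # Assumption: the lease cost is spread evenly across the term.
    annual_expense = deal.lease_total_usd / deal.lease_years
    rows = []
    for year in range(1, deal.lease_years + 1):
        # Chip revenue lands entirely in the first year; later years book none.
        revenue = deal.chip_sale_usd if year == 1 else 0.0
        rows.append((year, revenue, annual_expense))
    return rows


# Lease terms follow the Lambda deal in the text; the chip sale amount is assumed.
deal = LeasebackDeal(chip_sale_usd=1.0e9, lease_total_usd=1.5e9, lease_years=4)
for year, revenue, expense in yearly_view(deal):
    print(f"year {year}: revenue ${revenue / 1e6:,.0f}M, "
          f"lease expense ${expense / 1e6:,.0f}M")
```

Under these assumptions the sale is recognized in full in year 1, while the lease cost shows up as $375 million per year across four years. Real lease accounting is more involved than this sketch suggests.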

For partners, NVIDIA becomes their largest client. The linkage between computing power and cash flow strengthens ecosystem loyalty. More subtly, this approach stabilizes market sentiment. When even NVIDIA is willing to lease back at this price, it effectively endorses the AI computing market, anchoring price expectations—ultimately achieving three objectives with one move.

This model has been replicated across Nvidia-affiliated cloud computing startups: sell chips → invest in clients → lease back services → sell chips again, creating a self-sustaining funding loop for the AI chip ecosystem.

Simultaneously, Nvidia leverages its dedicated venture arm, NVentures, to bet on the broader AI ecosystem. It invests in numerous startups, from model developers to application-layer ventures. While not all focus on cloud services, the logic remains consistent: by investing, Nvidia binds potential customers and future ecosystems to itself. As these startups grow, their computing demands will inevitably translate into purchases of Nvidia chips.

It's fair to say Nvidia isn't merely selling chips; it's operating a massive AI startup incubator to build an “Nvidia-affiliated” cloud ecosystem.

- The “App Store” of AI Computing Power

Lepton, in essence, follows the same logic as Nvidia's “sell with one hand, rent with the other” approach: it acts as a “market maker” for computing power.

Unlike DGX Cloud, which deals directly with customers, Lepton doesn't operate cloud services itself. Instead, it acts as a “traffic dispatcher,” routing customer tasks to data centers run by ecosystem partners.

At its core, it's a computing power marketplace. Users simply submit their requirements on the Lepton platform, and Lepton automatically matches them with available H100 or Blackwell GPUs—regardless of whether that hardware resides in CoreWeave, Lambda, AWS, or Azure data centers.
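The matching step described above can be sketched as a toy marketplace. This is an illustration only and does not reflect Lepton's actual API or scheduling logic: the `Offer` and `Request` types, the `match` function, the placeholder provider names, the prices, and the "cheapest qualifying offer wins" rule are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offer:
    provider: str            # placeholder name, not a real provider listing
    gpu_type: str            # e.g. "H100" or "Blackwell"
    gpus_available: int
    usd_per_gpu_hour: float  # made-up price for illustration


@dataclass(frozen=True)
class Request:
    gpu_type: str
    gpus_needed: int
    max_usd_per_gpu_hour: float


def match(request: Request, offers: list[Offer]) -> Optional[Offer]:
    """Pick the cheapest offer that satisfies the request, or None."""
    candidates = [
        o for o in offers
        if o.gpu_type == request.gpu_type
        and o.gpus_available >= request.gpus_needed
        and o.usd_per_gpu_hour <= request.max_usd_per_gpu_hour
    ]
    # The user never names a provider; the marketplace chooses one.
    return min(candidates, key=lambda o: o.usd_per_gpu_hour, default=None)


offers = [
    Offer("provider-a", "H100", 64, 3.10),
    Offer("provider-b", "H100", 16, 2.80),
    Offer("provider-c", "Blackwell", 32, 5.50),
]

small_job = match(Request("H100", 8, 4.00), offers)
large_job = match(Request("H100", 32, 4.00), offers)
print(small_job.provider if small_job else "no capacity")
print(large_job.provider if large_job else "no capacity")
```

A real scheduler would presumably also weigh region, interconnect, and contract terms. The point of the sketch is only that the provider becomes an output of the platform rather than an input from the user.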

Moreover, Lepton integrates all GPU cloud resources under NVIDIA's software stack, such as the NIM microservices and NeMo framework. Developers need not concern themselves with underlying providers, gaining a consistent development experience and environment solely through the Lepton platform.

Crucially, Lepton resolves the conflict between NVIDIA and cloud giants. Under this model, NVIDIA is no longer a direct competitor to AWS or Azure but operates as a neutral scheduling platform. AWS and Microsoft have joined Lepton for a simple reason: this marketplace grants them access to additional computing demand.

For NVIDIA, the risk of confronting partners is too high. It's far wiser to step back and become the orchestrator and controller of the computing power marketplace. This exemplifies the proverb “Step back to gain a broader horizon.” By avoiding confrontation with partners, Nvidia maintains control over the ecosystem gateway. Regardless of which cloud provider customers choose, they ultimately rely on Nvidia's GPUs and software stack.

Thus, abandoning its own cloud service is not a sign of weakness. Lepton represents a win-win strategy for Nvidia, allowing it to have its cake and eat it too.

Of course, Lepton's rollout hasn't been entirely smooth sailing. Some smaller cloud providers worry that Nvidia might use it to insert itself into their customer relationships or to influence pricing, and the platform won't change the game overnight. But with both AWS and Azure now in the marketplace, Lepton's presence can't be ignored. Cross-cloud scheduling of future AI computing power may well be handled through a single platform.

For developers, “where to compute” becomes secondary; what matters most is whether computation is possible, how quickly it can be done, and at what cost. That is precisely the unified experience layer Lepton aims to deliver.

NVIDIA no longer needs to build its own “NVIDIA Cloud” nor clash head-on with partners at the IaaS layer. Its goal is to pull all players into its ecosystem, compelling every cloud provider to use NVIDIA GPUs, invoke NVIDIA frameworks, and execute procurement and scheduling through NVIDIA's gateway.

If successful, what appears merely as a GPU aggregation marketplace could become the control panel for the computing power world. Who gets the orders, who survives—the allocation power rests with NVIDIA. The longer-term gains lie in data and insights: during cross-cloud scheduling, Lepton naturally observes which task types are most active, which regions face greater strain, which GPU generations see heavier usage, and how price elasticity plays out—all of which inform business decisions.

Much as Apple controls the mobile internet through the App Store, Nvidia aims to do the same with Lepton, only with computing power in place of apps.

This explains Nvidia's current market logic. Simply put, it doesn't need to own the cloud; it only needs to control the compute stack and the entry points for demand. As long as global AI training and inference remain centered on its GPUs, Nvidia captures value across the chain, whether the compute ultimately resides on AWS, Microsoft Azure, Google Cloud, CoreWeave, or Lambda.

From AI chips to DGX Cloud and now Lepton, Nvidia's strategy has long since shifted from hardware to “computing power” and “platforms.” Any company whose market cap surpasses $4 trillion and whose products have become essential production resources in the AI era must harbor greater ambitions, wouldn't you agree?